A research perspective piece by Nancy MacKenzie, undergraduate in Biomedical Physics and Interdisciplinary Neuroscience at Portland State University

How did I get here?

I am in the final year of my biomedical physics BS and minor in interdisciplinary neuroscience at PSU. I am also a BUILD EXITO scholar. I have been performing research in my lab for close to two years. This summer, I’m working toward publishing a scientific paper. Later this year, I will be applying to Ph.D. programs in computational neuroscience.

If I stop there, all of that sounds a bit intimidating.

Not too long ago, I couldn’t imagine myself going to a university. My parents wanted me to be successful and go to college, but, as a first-generation student, there also wasn’t a clear pathway there. I liked learning and would go down what I call “research holes” with my interests, but challenging school and home dynamics led to me dropping out of high school. It took a while to get my diploma, and it seemed like getting a higher-ed degree wasn’t in the cards for me.

We all have different experiences and interface with different structures that lead to developing certain narratives about ourselves. Time and experience continue to change how those narratives look and what ends up winning out. It took a while for my researching tendencies to override my idea of what I was capable of – something that’s still a battle.

However, I went for it and filed my Free Application for Federal Student Aid, or FAFSA. Well, I filed it twice. The following year, I was ready to commit to finding a path to research. I started at Portland Community College (PCC), not entirely sure of my major.

BUILD EXITO

It was during my second term at PCC that I first saw the BUILD EXITO flyer.

BUILD EXITO is an undergraduate biomedical research training program funded by the NIH. BUILD (Building Infrastructure Leading to Diversity) is an initiative that focuses on increasing diverse perspectives within research by creating a pathway to research for traditionally underrepresented students. Students in the program, aka BUILD or EXITO scholars, come to Portland State University from multiple partner schools: community colleges in the Pacific Northwest, Alaska, Hawaii, and the U.S. Pacific Islands. Scholars have the opportunity to perform research at PSU or OHSU through Research Learning Communities (RLCs). Click here for current RLCs!

I was so excited because this could be my opportunity to cement this idea of becoming a researcher. So, I did the thing where I asked two of my instructors, who knew me for one term, whether they would write me a reference (spoiler, they did). I applied, nervously interviewed, and…

It’s through BUILD EXITO that I started working at my RLC, teuscher.:Lab, led by Dr. Christof Teuscher.

I chose a computational lab because I believe that the future of neuroscience research will have significant crossover with computer science and engineering. We can use machines to solve problems and look for larger patterns our brains can’t comprehend, akin to using electron microscopes to move past our optical restrictions.

“We use a radical interdisciplinary approach and apply tools from computer science, computer engineering, physics, biology, complex systems science, and cognitive science to the study and the design of next generation computing models and architectures.”

— teuscher-lab.com

Projects vary across the lab, with some working on software, hardware, or bridging between. Hardware can be thought of as the physical components, while the software gives instructions for how the physical components should operate. Current neuroscience-related research in the lab includes novel computing (more on that later) and designing neuromorphic, or brain-inspired, hardware.

Click here to read about the recent publication in Science on reconfigurable neuromorphic devices.

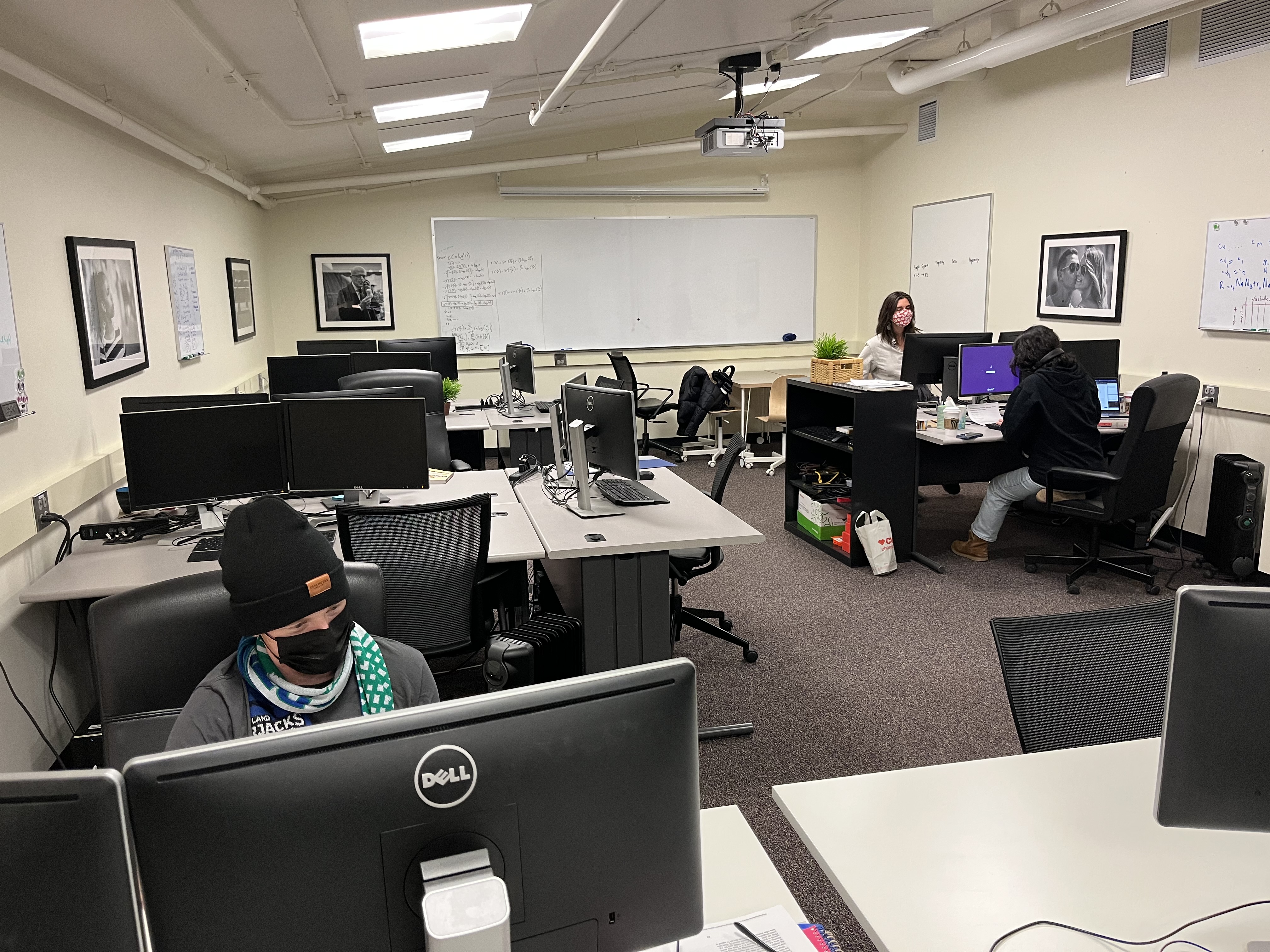

If you’re interested in computational research opportunities, every summer, Dr. Teuscher holds Computational Modeling Serving the Community, an NSF-funded Research Experience for Undergraduates (REU), and altREU. Both research experiences are framed around computational modeling that serves the community.

In the NSF-funded Computational Modeling REU, participants work with faculty mentors on identified issues. In altREU, participants identify issues in the community that they are passionate about solving. In both, they learn how to use computational models to do so.

What motivates my work in the lab

I am interested in the interplay between experience and neural architecture and the diversity between baseline architectures, aka neurodiversity. If you look up neurodiversity, one of the first articles that pop up is put out by Harvard Health, and I think they sum it up pretty nicely:

“Neurodiversity describes the idea that people experience and interact with the world around them in many different ways; there is no one “right” way of thinking, learning, and behaving, and differences are not viewed as deficits.”

— Baumer & Frueh

In the lab, I use machine learning, specifically artificial neural networks (ANNs), to explore how information is processed and stored during learning. Given a task, or a learning experience, how is the information processed and stored to arrive at an answer? How does that vary according to the task at hand – spatial, temporal?

To more clearly see this, we use a genetic algorithm to start with a population of small, varying neural networks and grow them according to how well they solve a given task. We can then look at the resultant connections, the number of neurons, etc., that grew from solving the task.

What exactly is machine learning, a neural network..?

I came into the lab with the beginnings of an interest in machine learning. I didn’t know Python. I had heard of it through another scholar in BUILD EXITO and later found that Python is essentially the language of choice these days when it comes to Science, Technology, Engineering, and Math (STEM) fields. R is also a popular statistical language in research that you may have used or heard of.

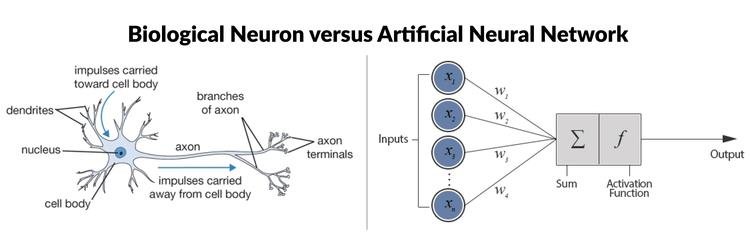

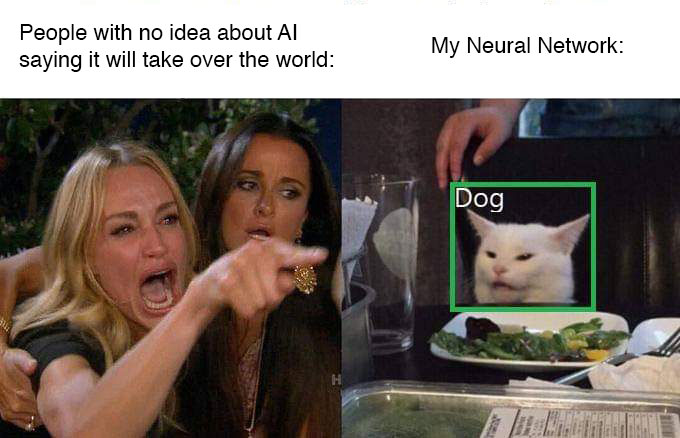

Machine learning, or often ML for short, is a field focused on developing systems that can learn and adapt by utilizing statistics and patterns in data without explicit programming. Artificial neural networks (ANNs) fall within this field.

ANNs are loosely modeled after biological neural networks in animal brains. Nodes or neurons transmit and receive signals through weighted connections. The weight of a connection scales the strength of the signal passing through it, and the weights are adjusted as the network learns. This learning occurs by training the network: giving it a target to compare its output against.

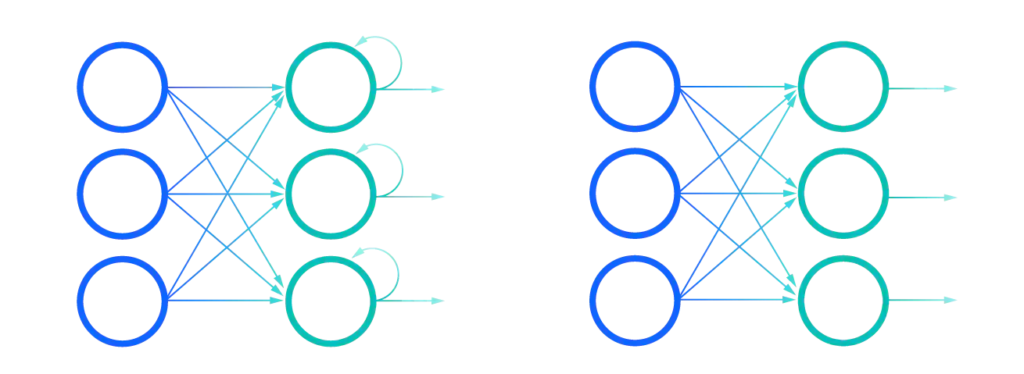

A traditional Artificial Neural Network (ANN) trains all weighted connections between neurons. The quantity of neurons or nodes must be pre-chosen. Information moves in one direction, from input to output, which is why this type of network is known as a feed-forward network.
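For readers who like to see ideas as code, here is a minimal Python sketch of a feed-forward pass. It is an illustration rather than code from the lab: the layer sizes, the weight values, and the sigmoid activation are all assumptions picked for the example.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights):
    """One layer: weights[j][i] is the connection from input i to neuron j."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)))
        for row in weights
    ]

def feed_forward(inputs, hidden_weights, output_weights):
    """Signals move one way only: inputs -> hidden layer -> output layer."""
    hidden = layer(inputs, hidden_weights)
    return layer(hidden, output_weights)

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_weights = [[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]]
output_weights = [[0.7, -0.2, 0.4]]

output = feed_forward([1.0, 0.0], hidden_weights, output_weights)
print(output)
```

Note that the number of neurons is fixed by the shape of the weight lists before anything runs. Training would adjust the numbers inside those lists, not their size.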

Recurrent neural networks (RNNs) improve on this by incorporating feedback loops where a node can be connected to itself. These recurrent connections allow the nodes to possess memory and process sequential tasks. The quantity of nodes/neurons must be pre-chosen.
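A small Python sketch can show what "memory" means here. This is an illustrative toy under my own assumptions (two nodes, hand-picked weights, a tanh activation), not the lab's code. Each node's new state depends on the current input and on the previous states, including its own.

```python
import math

def rnn_step(x, h_prev, w_in, w_rec):
    """Update every node from the current input x and the previous states."""
    n = len(h_prev)
    return [
        math.tanh(w_in[j] * x + sum(w_rec[j][k] * h_prev[k] for k in range(n)))
        for j in range(n)
    ]

def run_sequence(sequence, w_in, w_rec):
    """Feed a sequence in one value at a time, carrying the state along."""
    h = [0.0] * len(w_in)
    for x in sequence:
        h = rnn_step(x, h, w_in, w_rec)
    return h

# Hypothetical weights. The diagonal of w_rec holds the self-connections.
w_in = [0.5, -0.3]
w_rec = [[0.8, 0.0],
         [0.2, 0.5]]

# Both sequences end with the same two inputs, but only one began with a 1.0.
state_a = run_sequence([1.0, 0.0, 0.0], w_in, w_rec)
state_b = run_sequence([0.0, 0.0, 0.0], w_in, w_rec)
print(state_a, state_b)
```

The final states differ even though the last two inputs are identical, because the recurrent connections keep a fading trace of the earlier input.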

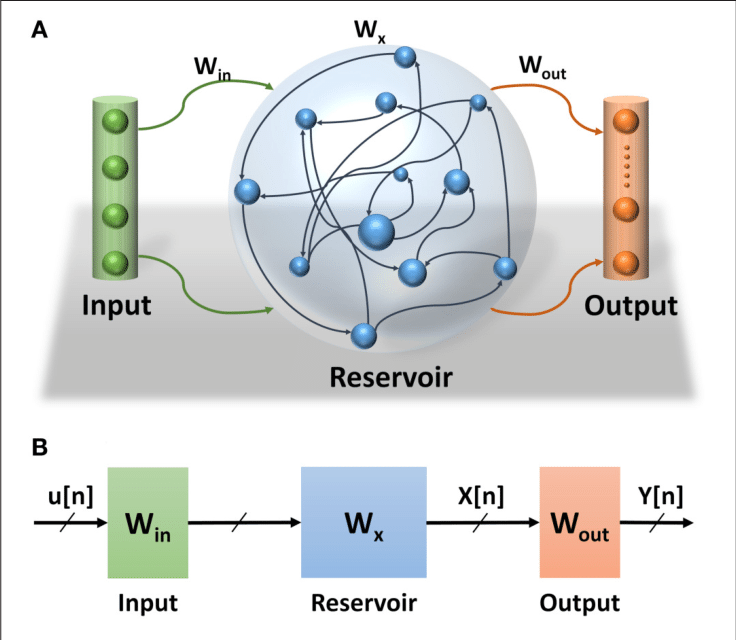

Reservoir Computers (RCs) are a type of RNN that improves learning/training efficiency. Connections between nodes are sparse and fixed. Unlike the traditional ANN, where every connection is trained, only the output weights need to be trained. The quantity of nodes/neurons must still be pre-chosen. There are many papers on the use of RCs in understanding the brain. Enel et al. (2016) found that RCs show properties similar to neural dynamics in the prefrontal cortex, and Rodriguez, Izquierdo & Ahn (2019) explored optimal modularity and memory capacity in RCs, among other studies.

The type of RC we’re using is an Echo State Network (ESN).
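To make the "only the output weights are trained" idea concrete, here is a rough Python sketch of a tiny ESN. It is not the lab's implementation. The reservoir size, sparsity, weight ranges, the toy task (recalling the previous input), and the use of ridge regression for the readout (a common choice for ESNs) are all assumptions made for illustration.

```python
import math
import random

def make_reservoir(n_nodes, density, scale, rng):
    """Random, sparse recurrent weights that stay fixed (never trained)."""
    return [
        [rng.uniform(-scale, scale) if rng.random() < density else 0.0
         for _ in range(n_nodes)]
        for _ in range(n_nodes)
    ]

def collect_states(inputs, w_in, w_res):
    """Drive the reservoir with the input sequence and record every state."""
    n = len(w_in)
    state = [0.0] * n
    states = []
    for u in inputs:
        state = [
            math.tanh(w_in[j] * u + sum(w_res[j][k] * state[k] for k in range(n)))
            for j in range(n)
        ]
        states.append(state)
    return states

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][c] * x[c] for c in range(i + 1, n))) / m[i][i]
    return x

def train_readout(states, targets, ridge=1e-3):
    """Fit the output weights with ridge regression: the only training step."""
    n = len(states[0])
    a = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    b = [sum(s[i] * t for s, t in zip(states, targets)) for i in range(n)]
    return solve(a, b)

def predict(states, w_out):
    """Readout: a weighted sum of the reservoir states at each time step."""
    return [sum(w * s for w, s in zip(w_out, state)) for state in states]

rng = random.Random(0)
n_nodes = 20  # the reservoir size still has to be chosen up front
w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
w_res = make_reservoir(n_nodes, density=0.2, scale=0.3, rng=rng)

inputs = [rng.uniform(-1.0, 1.0) for _ in range(300)]
targets = [0.0] + inputs[:-1]  # toy task: recall the previous input

states = collect_states(inputs, w_in, w_res)
w_out = train_readout(states, targets)
predictions = predict(states, w_out)
mse = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)
print("training error:", mse)
```

The input and reservoir weights are generated once and never touched again. All of the learning happens in `train_readout`, which is why training a reservoir computer is comparatively cheap.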

As we go down the line, the networks improve in their ability. However, you may have noticed a theme; the number of neurons in our simulated network, or its size, must still be pre-chosen.

Using genetic algorithms to evolve network size and connectivity

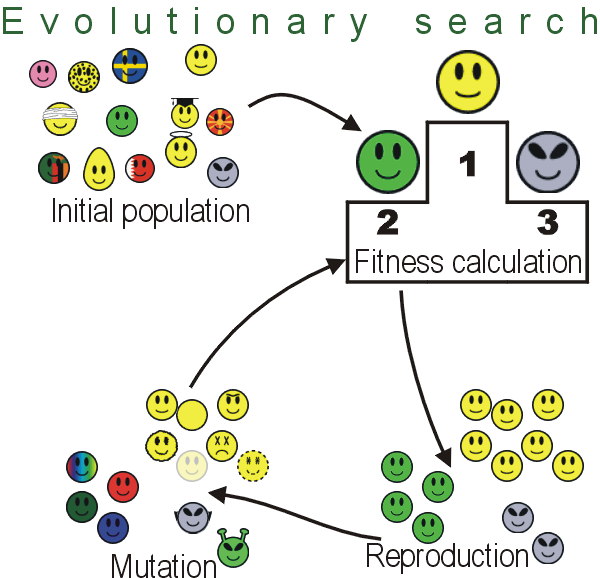

An algorithm is essentially a set of instructions. Genetic algorithms take inspiration from biology in their set of instructions. A randomized set of solutions to a problem is called the population. For each generation, each potential solution in the population is tested for how well it solves the given problem, and its performance is recorded. If the desired performance is not reached, a new generation is created using biologically inspired operators to optimize the next set of solutions: selection of the fittest (most accurate) solutions, crossover (blending of solutions), and mutation (applying changes with some random probability).
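The loop described above can be sketched in a few lines of Python. This is a generic toy genetic algorithm, not Deep HyperNEAT. The problem (matching a target string of bits), tournament selection, single-point crossover, and keeping the best solution each generation are all choices I made for the example.

```python
import random

def fitness(solution, target):
    """Performance: how many positions match the target."""
    return sum(1 for s, t in zip(solution, target) if s == t)

def select(population, scores, rng, k=3):
    """Tournament selection: the fittest of k random candidates wins."""
    contenders = rng.sample(range(len(population)), k)
    return population[max(contenders, key=lambda i: scores[i])]

def crossover(a, b, rng):
    """Blend two parents: the start of one, the end of the other."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def mutate(solution, rate, rng):
    """Flip each bit with a small random probability."""
    return [1 - bit if rng.random() < rate else bit for bit in solution]

def evolve(target, pop_size=30, mutation_rate=0.02, max_generations=200, seed=0):
    rng = random.Random(seed)
    # Generation 0: a randomized set of candidate solutions (the population).
    population = [[rng.randint(0, 1) for _ in target] for _ in range(pop_size)]
    for generation in range(max_generations + 1):
        scores = [fitness(s, target) for s in population]
        best = population[scores.index(max(scores))]
        # Stop once the desired performance is reached, or we run out of time.
        if max(scores) == len(target) or generation == max_generations:
            return best, generation
        next_population = [best]  # carry the current best forward unchanged
        while len(next_population) < pop_size:
            parent_a = select(population, scores, rng)
            parent_b = select(population, scores, rng)
            child = crossover(parent_a, parent_b, rng)
            next_population.append(mutate(child, mutation_rate, rng))
        population = next_population

target = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1]
best, generations = evolve(target)
print(fitness(best, target), "of", len(target), "bits matched after",
      generations, "generations")
```

In this toy the solutions have a fixed length. A method that evolves network structure instead lets mutation add neurons and connections, so the size of a solution can itself change over generations.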

The genetic algorithm we’re using is Deep HyperNEAT (DHN). In DHN, mutation is the main form of growth, adding depth (new layers of neurons or nodes), breadth (parallel pathways), and connectivity between neurons.

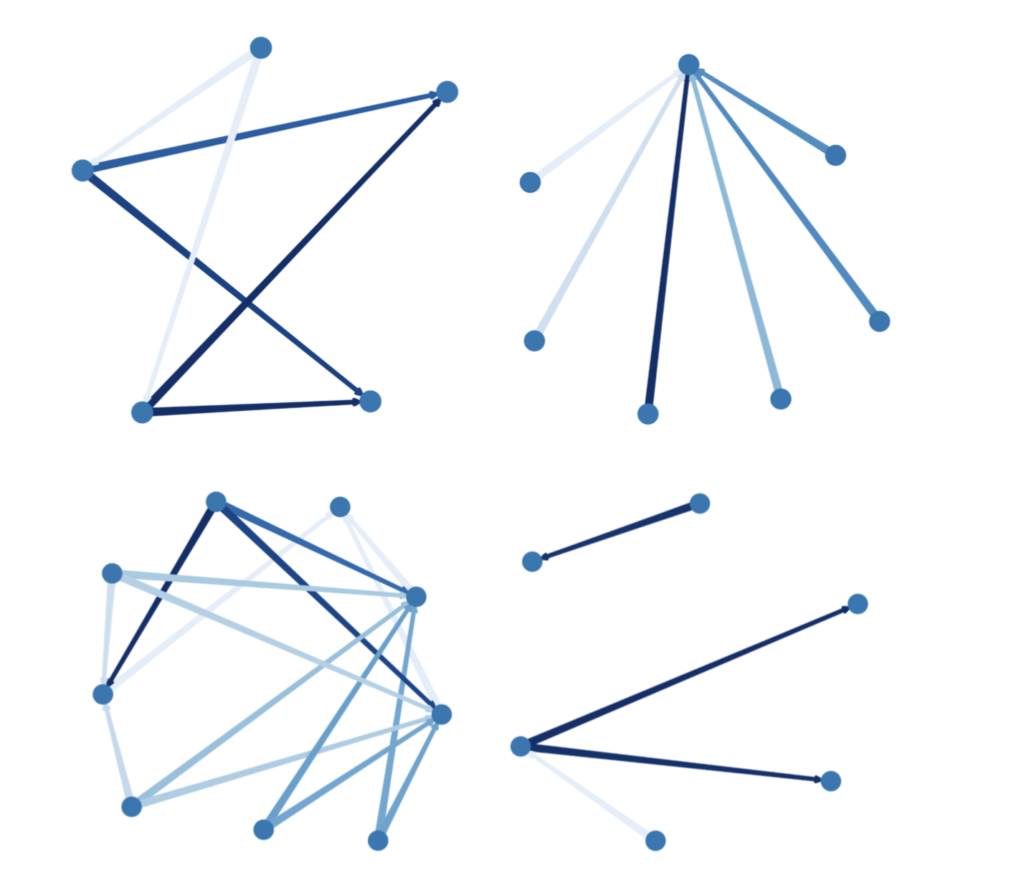

By combining Deep HyperNEAT (DHN) with an Echo State Network (ESN), we can generate a population of minimal architectures and test each one as the reservoir of an ESN, giving each a classification task to learn. Given a set of inputs representing the task, the network classifies the input pattern and outputs its answer. An example classification task is answering whether a given pattern is a horizontal or vertical line. We then perform many runs to capture the larger behavior, which we can analyze to explore how many neurons it takes to solve the problem and how they are connected.
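As a rough idea of what "inputs representing the task" could look like, here is a hypothetical Python encoding of the horizontal-versus-vertical task. The 3x3 grid, the 0/1 pixel values, and the label numbers are assumptions for illustration, not the exact setup used in the lab.

```python
def line_pattern(orientation, index, size=3):
    """Draw one straight line on an otherwise empty size x size grid."""
    if orientation not in ("horizontal", "vertical"):
        raise ValueError("orientation must be 'horizontal' or 'vertical'")
    grid = [[0] * size for _ in range(size)]
    for i in range(size):
        if orientation == "horizontal":
            grid[index][i] = 1  # fill one row
        else:
            grid[i][index] = 1  # fill one column
    return grid

def flatten(grid):
    """Turn the grid into a flat list, one value per network input."""
    return [pixel for row in grid for pixel in row]

def make_dataset(size=3):
    """Pair every pattern with a label: 0 = horizontal, 1 = vertical."""
    data = []
    for index in range(size):
        data.append((flatten(line_pattern("horizontal", index, size)), 0))
        data.append((flatten(line_pattern("vertical", index, size)), 1))
    return data

dataset = make_dataset()
for pattern, label in dataset:
    print(pattern, label)
```

Each flattened pattern would be presented to a network as its inputs, and the network's output would be compared against the label to score how well that architecture solves the task.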

Because the networks start from minimal architectures and grow over time only to the complexity required by the task, we can see and analyze architecture that is reflective of the task. Below are four high-performing architectures, showing the diversity in architecture that may arrive at a solution.

Over the summer, we’ll continue to analyze the resultant architectures as we train them on various tasks. To get a more detailed review of the current state of my research, click here to view my poster on evolving neural networks using a genetic algorithm from this year’s PSU Research Symposium.

What a typical day is like and what I’ve learned about research

Especially with COVID, my work has been mostly remote for the last two years. From the start, there has been a lot of autonomy in the lab. I express my interests and my PI helps guide me based on his knowledge of the field. We meet weekly to figure out next steps, troubleshoot the sometimes numerous bugs, and discuss results. My hours are flexible, as long as I’m staying on course with our plan of action. Sometimes, in the days or week between meetings, I have ended up in a place where I have no idea what I’m doing.

When the scope of the problem gets too big, breaking down the process into simpler steps helps me look at what it takes to solve the problem. The big picture can be vague and overwhelming sometimes. Where can you start, or what do you know? Write it down! I regularly re-learn this one.

Through these two years, I’ve also learned and re-learned that research is a patient process. It takes time to get trained and acclimated in a lab. In my case, it was an almost entirely new world coming from a different department with little exposure to Python or ML. It took me some extra time to work on my basic translating skills between how I was used to thinking and expressing myself and how machines process information. Now I sometimes laugh at how often I consider things using “if-then” statements – a conditional statement common to programming logic.

And yet I still regularly feel imposter-y, which I feel is important to mention because I think it’s a challenge many of us face. I was talking the other day about how I think I am regularly trying to prove my utility and ability. However, the more I learn, the more I am exposed to all there is to learn. I think that can easily turn into a feeling of not knowing enough or being an imposter. There is an interview with the famous Nobel Prize-winning physicist Richard Feynman that comforts me in this regard:

“I was an ordinary person who studied hard. There are no miracle people. It just happens they got interested in this thing and they learned all this stuff. They’re just people.”

— Richard Feynman

If you’re curious to hear more about my entrance into the lab from another department, I was interviewed by Emily Hahn, alongside Ph.D. student Sandy Dash and my PI, Dr. Christof Teuscher, which you can read here.

Linking opportunities

Get involved with research

Check out the following links to get involved with a local research program or find an REU that aligns with your interests.

- McNair

- LSAMP

- BUILD EXITO

- OHSU Vollum/NGP Undergraduate Summer Research Program

- Research Opportunities for Undergraduates

Many peers I know have also had great luck with gaining internships and research experience in labs by talking to Principal Investigators (PIs) who perform interesting research. Don’t be afraid to reach out!

Learn Python

Some basic programming skills are a huge asset and will be increasingly valuable in research! Check out these links to start exploring.

- Python Tutorial – Python Full Course for Beginners – My PI recommended this in-depth intro to Python when I first started at the lab. It is divided into chapters on YouTube. This means you can do a little at a time and click back where you left off!

- Coursera’s Programming for Everybody – An intro to Python programming course. I took this course, which makes me a certified Pythonista, and you can be one too.

Machine Learning and Neural Networks

The material I started with and/or currently use. Also, regular googling (or your search engine of choice) and YouTubing is your friend!

- Tariq Rashid’s Make Your Own Neural Network – This is an amazing introduction to neural networks! This was recommended by my PI and I can’t recommend it enough. There is also an online module version of this through educative.io, which you can access for free through the GitHub Student Developer Pack (a must-have for all the free access to courses and software).

- Machine Learning Mastery – I regularly visit this site for tutorials.

Contact

If you’re interested in talking more about research, whether computational, neuroscience, or otherwise, feel free to reach out to me at nan26@pdx.edu.